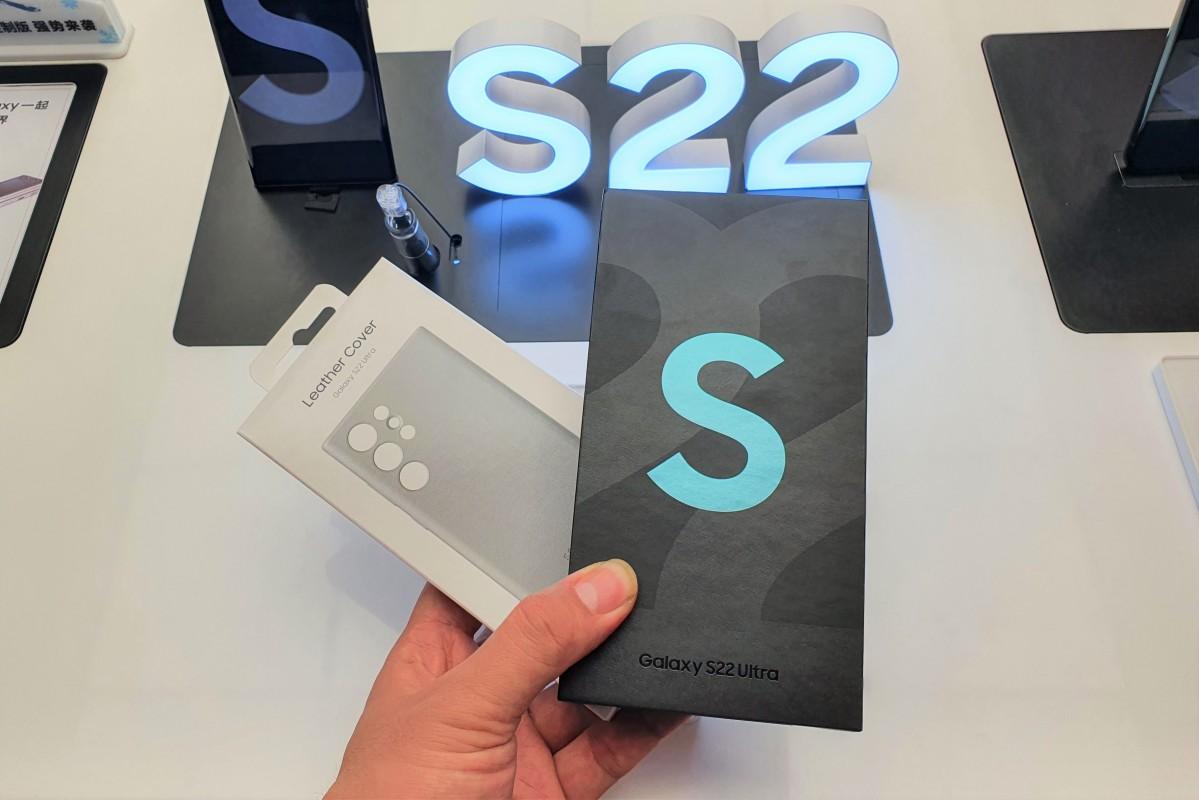

(Continued from last time) Nearly all major Android smartphone makers introduced cameras with higher resolution and greater zoom throughout 2020. Samsung already offers a 108-megapixel camera in several models, and a 200-megapixel sensor is coming soon. Apple, meanwhile, has bucked the trend: the iPhone 12 Pro's cameras stay at 12 megapixels, with a zoom range of 0.5x to 2x.

As a result, except in low light, you will hardly notice the difference between an image taken with the new model and one taken with the iPhone 11 Pro. Thanks to improvements in the lens and software, the iPhone 12 Pro gathers and processes light better than before, so photos taken at night or in dimly lit rooms look as if they were shot at dusk rather than in total darkness, with more vivid color.

For professional creators, or at least those who shoot with iPhones as well as high-end cameras, Apple has also added Dolby Vision HDR recording and Apple ProRAW. Both increase the amount of visual data the camera records, and you can edit the results afterward for optimal color and brightness on supported displays.

Some videographers will start using these features, but only TVs that support Dolby Vision Profile 8.4 can take full advantage of the HDR footage, and tools for ProRAW are only now becoming available. It's still early days, so it will be a while before either feature affects most users.

The iPhone 12 Pro also adds a lidar 3D scanner to the back, a feature Apple introduced with the 2020 iPad Pro earlier this year. Located alongside the rear cameras, the iPhone's lidar scanner is smaller than the iPad's but has roughly the same functionality: it uses invisible lasers to create dynamic 3D maps of whatever you point it at. For now, these 3D maps are used to improve the speed and accuracy of real-time AR rendering with the rear camera, as well as autofocus in low light.

Lidar could be the iPhone 12 Pro's biggest advantage over its competitors. Apple doesn't yet use the feature in most of its own apps, but a third-party app called Polycam shows how lidar can be combined with the camera to 3D scan an object or a space. I tried Polycam this weekend: in less than three minutes of total scanning and processing time, I brought an entire room into digital space, then used drag and pinch gestures to rotate and zoom around the 3D data.

Despite the novelty of near-instantaneous 3D scanning on a smartphone, everyone I've shown my Polycam models to has said the same thing: "Amazing, but what can you actually do with these 3D models?" Adding new hardware is nice, but most people want working software and examples of how to use it. Apple, it's your turn now. (To be continued)

[via VentureBeat] @VentureBeat

[Original]

BRIDGE operates a membership system, "BRIDGE Members". BRIDGE Tokyo, a community for members, provides a place to connect startups and readers through tech news, trend information, Discord, and events. Registration is free.